Modeling Kata here again! Models are useful to describe things, systems, knowledge; basically any information you want to organize and formalize will benefit from a solid formalism like Ecore.

Structuring and describing is nice, but most of the time you then need to evaluate your design. You have several choices here. One of them is using validation tools, so that any "error in your design" is shown to you and you can fix it. The drawback of validation is that you can't easily get the big picture of your design's quality with respect to the constraints you defined.

Who can say whether the bees invading my garden are organized in a nice or a poor way? That's definitely not binary information.

Another approach is to design your models with tooling that updates or builds other parts of the model to give you information about its quality. Let's take a (quite naive but still interesting ;) ) example:

I defined a formalism for a "flow-like" language, which you can use to describe DataSources and Processors linked by DataFlows. Processors and DataFlows are capacity-bounded, which means they have a maximum capacity and, under a given load, will be idling or overused.
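Since the post describes usage only through colored labels (low, orange, red), here is a minimal Java sketch of how a usage level could be derived from a load and a capacity. The `UsageLevel` and `Usage` names and the 75% threshold for "high" are assumptions for illustration, not values taken from flow.ecore or from the author's rules.

```java
// Hypothetical levels mirroring the labels used in the post: low, high (orange), overloaded (red).
enum UsageLevel { LOW, HIGH, OVERLOADED }

final class Usage {
    private Usage() {}

    // Hypothetical thresholds: above capacity is OVERLOADED, 75% or more is HIGH, the rest is LOW.
    static UsageLevel classify(int load, int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        if (load > capacity) {
            return UsageLevel.OVERLOADED;
        }
        // load / capacity >= 3/4, written with integers to avoid floating-point rounding.
        if (4 * load >= 3 * capacity) {
            return UsageLevel.HIGH;
        }
        return UsageLevel.LOW;
    }
}
```

With these assumed thresholds, a load of 8 on a capacity of 10 is `HIGH`, and anything above the capacity is `OVERLOADED`.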

Here is a class diagram displaying the simplest parts of flow.ecore:

Here I'm mixing the information I'll describe (a given system with datasources, processors and flows) with the feedback about my design (the flow element usage).

Note that every element here might be activated or not (see the FlowElementStatus enumeration).
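The class diagram itself isn't reproduced here, so, as a rough picture only, this is what a hand-written Java equivalent of such a metamodel could look like. All class, field, and enum literal names are hypothetical stand-ins for what EMF would generate from flow.ecore; the `load` field plays the role of the design feedback mixed into the model.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical literals: the real FlowElementStatus enumeration may use other names.
enum FlowElementStatus { ACTIVE, INACTIVE }

// Every element can be switched on or off, and carries a feedback value
// (its current load) that rules compute instead of the user typing it in.
abstract class FlowElement {
    final String name;
    FlowElementStatus status = FlowElementStatus.INACTIVE;
    int load;  // design feedback, updated by rules

    FlowElement(String name) {
        this.name = name;
    }

    boolean isActive() {
        return status == FlowElementStatus.ACTIVE;
    }
}

// Anything that can be the source of a DataFlow.
abstract class FlowSource extends FlowElement {
    final List<DataFlow> outgoing = new ArrayList<>();

    FlowSource(String name) {
        super(name);
    }
}

class DataSource extends FlowSource {
    final int production;  // how much data the source emits (Freddy: 8)

    DataSource(String name, int production) {
        super(name);
        this.production = production;
    }
}

// A Processor is both a flow target and a flow source, like "Me" in the example.
class Processor extends FlowSource {
    final int capacity;
    final List<DataFlow> incoming = new ArrayList<>();

    Processor(String name, int capacity) {
        super(name);
        this.capacity = capacity;
    }
}

class DataFlow extends FlowElement {
    final FlowSource source;
    final Processor target;
    final int capacity;

    DataFlow(String name, FlowSource source, Processor target, int capacity) {
        super(name);
        this.source = source;
        this.target = target;
        this.capacity = capacity;
        // Keep both ends navigable, similar to what an EMF eOpposite reference provides.
        source.outgoing.add(this);
        target.incoming.add(this);
    }
}
```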

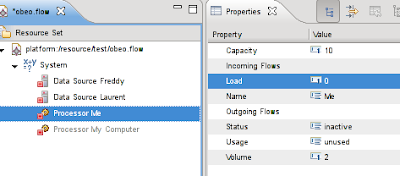

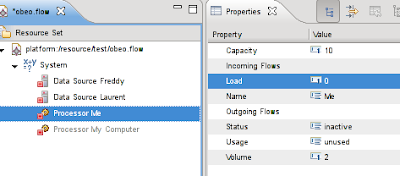

Now, to define the rules that update each value based on the overall model, I basically have two choices: implement them in Java, or use a rules engine. Implementing them in Java might look like a good idea, but you'll quickly realise that:

- adapting the rules to constraints that are specific to a project will force you to redeploy everything

- you'll write code that browses the whole model and updates values depending on what it finds, and with big models you'll get poor performance
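For contrast, here is roughly what the hand-written Java route tends to look like: a hypothetical `recomputeAll` helper that, after any edit, browses every flow and rebuilds every load from scratch. The `FlowLink` type and its fields are illustrative stand-ins, not the post's generated EMF classes.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical minimal model: one entry per DataFlow, carrying what its
// source emits and whether that source is currently active.
final class FlowLink {
    final String source;
    final String target;
    final int amount;
    final boolean sourceActive;

    FlowLink(String source, String target, int amount, boolean sourceActive) {
        this.source = source;
        this.target = target;
        this.amount = amount;
        this.sourceActive = sourceActive;
    }
}

final class HandWrittenUsage {
    private HandWrittenUsage() {}

    // Called after ANY edit: walks every flow again and rebuilds every target's
    // load from scratch. The work grows with the whole model, not with the size
    // of the edit, and the logic is compiled into the tool.
    static Map<String, Integer> recomputeAll(List<FlowLink> flows) {
        Map<String, Integer> load = new HashMap<>();
        for (FlowLink f : flows) {
            if (f.sourceActive) {
                load.merge(f.target, f.amount, Integer::sum);
            }
        }
        return load;
    }
}
```

With Freddy (8) and a hypothetical Laurent output of 3 both active, `recomputeAll` reports a load of 11 for Me, but it re-reads every flow to get there, even when only one element was toggled.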

You bet I picked the rules engine, so that I could get my hands dirty with those strange beasts you (most of the time) never ever want to meet again after graduating. I picked JBoss Drools: it seemed nice and powerful, and it's based on an implementation of a Rete-like algorithm, which makes it fast. I also have to admit I liked their logo; it's really cool.

EMF and Drools get along really nicely. Drools treats your Java instances as facts, so it's really easy to integrate with EMF. EMF, in turn, provides a generic notification mechanism, which makes it easy to let Drools know that something changed and that it might have some work to do.
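The post doesn't show its integration code, so the sketch below uses plain Java stand-ins rather than the real EMF and Drools APIs. `ModelElement` plays a model object whose setter reports which instance changed (in EMF, generated setters send notifications to the adapters attached to the object), and `MiniSession` plays a rule session that only re-examines the facts it was told about. All names, and the single "rule" that just logs a status, are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Consumer;

// Stand-in for a model object: the setter tells every listener which instance changed.
class ModelElement {
    final String name;
    private boolean active;
    private final List<Consumer<ModelElement>> listeners = new ArrayList<>();

    ModelElement(String name) {
        this.name = name;
    }

    void addListener(Consumer<ModelElement> listener) {
        listeners.add(listener);
    }

    boolean isActive() {
        return active;
    }

    void setActive(boolean value) {
        if (value == active) {
            return;  // nothing changed, nothing to report
        }
        active = value;
        for (Consumer<ModelElement> l : listeners) {
            l.accept(this);
        }
    }
}

// Stand-in for a rule session: facts are plain objects, and only changed facts are re-examined.
class MiniSession {
    private final Set<ModelElement> dirty = new LinkedHashSet<>();
    final List<String> fired = new ArrayList<>();

    void insert(ModelElement fact) {
        fact.addListener(dirty::add);  // the "adapter": forward change notifications
        dirty.add(fact);               // a newly inserted fact is evaluated once
    }

    // Apply one hypothetical rule to the changed facts only, then forget them.
    void fireAllRules() {
        for (ModelElement e : dirty) {
            fired.add(e.name + (e.isActive() ? " is active" : " is inactive"));
        }
        dirty.clear();
    }
}
```

Toggling Me with `setActive(true)` queues only Me, so the next `fireAllRules()` re-examines one fact instead of the whole model; setting the same value again sends no notification at all.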

Here is the result. Let's design a flow related to my work:

Freddy is a datasource which produces lots of information (8). He's inactive right now (see the red icon ;) ).

Let's add Laurent, who is way more quiet, and "Me", a processor that is both a flow target and a flow source, as I'm providing data to "My Computer". My capacity is 10; my computer has way more capacity than me.

Let's connect everybody with DataFlows, each of them having a max capacity of 10.

Freddy and Laurent are both connected to Me, and I'm connected to My Computer.

Everybody is inactive, so let's activate part of the system: Me and My Computer.

As long as Freddy and Laurent are not there, everything is fine: my usage is "low".

As I activate elements in the editor, everything gets updated "on the fly" and the labels reflect the current usage of each element.

Now Laurent is activated. Everything is still fine (you can kind of guess the next step, right? ;) )

Freddy is activated: I'm overstressed (see the red), and even the dataflow from Freddy to me has quite a high usage (orange color).

So now I have different options: I can redesign my system so that the capacities are higher (for me and/or the dataflow), or split parts of the Freddy flow and distribute them to other processors. I can try every solution, activate/deactivate elements, and see whether my system meets my constraints or not.
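The arithmetic behind those colors can be sketched in a few lines of Java. This assumes that Me's load is the sum of what its active sources push into it and reuses a hypothetical 75% threshold for "high"; Laurent's output of 3 is invented for illustration, since the post only says he is "way more quiet". The `meCapacity` parameter lets you try the "higher capacity" option.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class FlowScenario {
    private FlowScenario() {}

    static final int FREDDY_OUTPUT = 8;   // from the post
    static final int LAURENT_OUTPUT = 3;  // hypothetical: the post gives no number
    static final int FLOW_CAPACITY = 10;  // every DataFlow, from the post

    // Hypothetical labels and thresholds: above capacity is "over", 75% or more is "high".
    static String level(int load, int capacity) {
        if (load > capacity) return "over";
        if (4 * load >= 3 * capacity) return "high";
        return "low";
    }

    // Assumes a processor's load is the sum of what its active sources push into it.
    static Map<String, String> evaluate(boolean freddyActive, boolean laurentActive, int meCapacity) {
        int freddyFlow = freddyActive ? FREDDY_OUTPUT : 0;
        int laurentFlow = laurentActive ? LAURENT_OUTPUT : 0;
        int meLoad = freddyFlow + laurentFlow;

        Map<String, String> usage = new LinkedHashMap<>();
        usage.put("Me", level(meLoad, meCapacity));
        usage.put("Freddy->Me", level(freddyFlow, FLOW_CAPACITY));
        usage.put("Laurent->Me", level(laurentFlow, FLOW_CAPACITY));
        return usage;
    }
}
```

Walking through the steps with Me's capacity of 10: `evaluate(false, false, 10)` gives "low" for Me (load 0), `evaluate(false, true, 10)` still gives "low" (load 3), and `evaluate(true, true, 10)` gives "over" for Me (8 + 3 = 11 > 10) and "high" for the Freddy flow (8 of 10). Under these assumptions any Laurent output above 2 overloads Me, and the first redesign option, `evaluate(true, true, 12)`, brings Me back to "high" (11 of 12).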

Now let's have a look at how I did that...

Here are the rules I'm using. They are quite straightforward, and it's easy to add more rules expressing really complicated constraints.

This language is dedicated to defining logic rules and is, as a matter of fact, good at it.

Mixing Drools and EMF has just been a matter of setting up an adapter on my resource when loading the model. Then, if something gets updated, EMF tells Drools which instance changed, and Drools fires the corresponding rules, chaining them if needed.
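To illustrate the "chaining them if needed" part, here is a deliberately naive plain-Java sketch of forward chaining: each hypothetical rule reads the facts and may update one, and the loop keeps firing rules until a full pass changes nothing. This is not how Drools works internally (it relies on a Rete-like network to avoid re-checking everything), and the fact keys as well as the "a processor forwards everything it receives" rule are assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical rule shape: inspect the facts, maybe update one, report whether anything changed.
interface FlowRule {
    boolean fire(Map<String, Integer> facts);
}

final class ForwardChaining {
    private ForwardChaining() {}

    // Rule action helper: write a fact and report whether its value actually changed.
    static boolean set(Map<String, Integer> facts, String key, int value) {
        Integer old = facts.put(key, value);
        return old == null || old != value;
    }

    // Hypothetical rules for the example topology (Freddy, Laurent -> Me -> My Computer),
    // listed downstream-first on purpose so that the chaining is visible.
    static List<FlowRule> rules() {
        List<FlowRule> rules = new ArrayList<>();
        rules.add(f -> set(f, "MyComputer.load", f.getOrDefault("Me->MyComputer", 0)));
        rules.add(f -> set(f, "Me->MyComputer", f.getOrDefault("Me.load", 0)));
        rules.add(f -> set(f, "Me.load",
                f.getOrDefault("Freddy.output", 0) + f.getOrDefault("Laurent.output", 0)));
        return rules;
    }

    // Naive forward chaining to a fixpoint (NOT Rete): fire every rule, and start
    // another pass whenever one of them changed a fact. Returns the number of passes.
    static int fireAllRules(Map<String, Integer> facts, List<FlowRule> rules) {
        int passes = 0;
        boolean changed = true;
        while (changed) {
            changed = false;
            passes++;
            for (FlowRule rule : rules) {
                changed |= rule.fire(facts);
            }
        }
        return passes;
    }
}
```

Starting from Freddy at 8 and a hypothetical Laurent at 3, the change has to travel from Me's load to the Me->MyComputer flow and then to My Computer, so this ordering needs four passes (three that change something, one that confirms nothing else changes) before every value settles at 11.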

I'm not the first one doing this; googling a bit, you'll find papers on the subject.

Quick reminder of what's nice about this approach:

- not reinventing the wheel

- great expressiveness for your rules

- great performance, even with many, many, many rules

- rules are easy to customize, and you don't need to change your code to take new construction rules into account.